Concordia University researchers have developed a faster and more accurate technique for creating highly detailed 3D models of large-scale landscapes. With this technique, digital replicas of real-world environments can be created down to the pixel level, making it easier for people to explore and navigate various areas. This innovation makes creating digital twins of the real world more accessible for various applications.

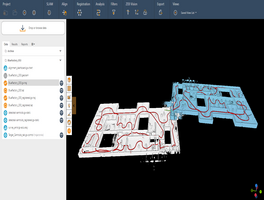

The new technique, called HybridFlow, uses highly detailed aerial images, typically over 200 megapixels each, captured from aircraft flying at altitudes exceeding 30,000 feet (approx. 9,000 m) to generate precise 3D models of cityscapes, landscapes and mixed areas. The resulting models accurately represent the appearance and structure of the environment, down to the colors of individual structures.

Overcoming occlusion and repetition in 3D reconstruction

Unlike conventional 3D reconstruction methods that rely on identifying visual similarities between images to construct models, HybridFlow’s advanced approach mitigates issues such as occlusion and repetition, resulting in extraordinary accuracy.

Charalambos Poullis, an associate professor of computer science and software engineering at the Gina Cody School of Engineering and Computer Science, created the framework together with PhD student Qiao Chen. “This digital twin can be used in typical applications to navigate and explore different areas, as well as virtual tourism, games, films and so on,” Poullis said. “More importantly, there are very impactful applications that can simulate processes in a secure and digital way. So, it can be used by stakeholders and authorities to simulate ‘what-if’ scenarios in cases of flooding or other natural disasters. This allows us to make informed decisions and evaluate various risk-mitigating factors.”

No need for deep learning

Conventional 3D reconstruction methods rely on identifying visual similarities between images, but because images are often large, issues like occlusion and repetition can decrease accuracy. HybridFlow takes a different approach. Instead of matching key points in an image and propagating matches across an area, images are clustered into perceptually similar sections at the pixel level. This method allows for faster processing and more robust tracking of points across images, resulting in a highly accurate reproduction.
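To give a rough sense of what grouping pixels into perceptually similar sections can look like, here is a toy sketch in Python. It is not HybridFlow's actual algorithm: the function names, the `tolerance` parameter and the use of plain RGB distance as a stand-in for perceptual similarity are all assumptions made for illustration.

```python
from collections import deque


def color_distance(a, b):
    """Euclidean distance in RGB; a crude stand-in for perceptual similarity."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


def cluster_pixels(image, tolerance=30):
    """Label 4-connected regions of similar color (illustrative only).

    image: list of rows, each row a list of (r, g, b) tuples.
    Returns a grid of integer region labels with the same shape.
    """
    height, width = len(image), len(image[0])
    labels = [[-1] * width for _ in range(height)]
    next_label = 0
    for y in range(height):
        for x in range(width):
            if labels[y][x] != -1:
                continue  # pixel already belongs to a region
            seed = image[y][x]
            labels[y][x] = next_label
            queue = deque([(y, x)])
            # Grow the region outward from the seed pixel.
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and labels[ny][nx] == -1:
                        if color_distance(image[ny][nx], seed) <= tolerance:
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
            next_label += 1
    return labels
```

In this sketch, every pixel ends up with a region label, so later steps could compare whole regions across images rather than isolated key points.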

“It also eliminates the need for any deep learning technique, which would require a lot of training and resources,” Poullis said. “This is a data-driven method that can handle an arbitrarily large image set.”

Storing data on disk instead of in memory optimizes the data pipeline for efficient processing. Moreover, with the help of a remote computer, an average-sized model of an urban area can be generated in less than 30 minutes.
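The idea of keeping data on disk rather than in memory can be sketched as follows. This is a minimal illustration, not the HybridFlow pipeline: the record layout (three 32-bit floats per point), the file handling and the function names are hypothetical.

```python
import struct

# Hypothetical record: one 3D point stored as three little-endian 32-bit floats.
RECORD = struct.Struct("<3f")


def write_points(path, points):
    """Append points to a binary file so they do not have to stay in memory."""
    with open(path, "ab") as f:
        for point in points:
            f.write(RECORD.pack(*point))


def iter_points(path, chunk_records=1024):
    """Yield points from disk, reading at most `chunk_records` records at a time."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(RECORD.size * chunk_records)
            if not chunk:
                break
            for point in RECORD.iter_unpack(chunk):
                yield point


def max_height(path):
    """Compute a statistic in one streaming pass without loading the whole file."""
    return max(z for _, _, z in iter_points(path))
```

Because only one chunk is held in memory at a time, the size of the data set is bounded by disk space rather than RAM, which is the property the article attributes to the pipeline.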

Poullis and Chen are currently working with the city of Terrebonne to use the HybridFlow system for disaster planning. With this system, the city can create a digital twin of the area and simulate different flood scenarios. By introducing barriers like sandbags into the digital twin, the city can make informed decisions about how to respond to a flood. The goal is not to prevent flooding altogether, but to equip the city with better tools to prepare for and respond to disasters.